
Category: AI, ML and LDNLS

by Admin on Tuesday, October 26th, 2010 at 23:34:37

I do some work in the field of artificial intelligence. I also do related work along the lines of artificial life and evolutionary software. One consequence of this is that I am often exposed to opinions and ideas from others with the same interests and/or pursuits. Here, I'm going to take on (and take down) one of the less well thought-out ideas currently making the rounds: the idea that, in order to have intelligence, a device must also have a body.

Where does this idea come from, you may ask? Professor Alan Winfield, Hewlett-Packard professor of electronic engineering at the University of the West of England, says "embodiment is a fundamental requirement of intelligence in general" and "a disembodied intelligence doesn't make sense." Susan Greenfield, professor of pharmacology at Oxford University's Lincoln College, says "My own view is that you can't disembody the brain."

So there's the setup, as it were. Here's the knockdown.

If a person is born deaf, do they fail to develop intelligence? No. If deaf and blind? No. If deaf, dumb and blind? No. Further, if a deaf, dumb and blind person suffers a spinal injury and loses nervous system contact with the body, do they suddenly become unintelligent? No. And so it goes. Intelligence is not about any particular sense, and it is not about mobility, nor, in the end, is it about structure.

In a (horrible, sorry) example, if I could support your brain's nutritional requirements (a matter of circulating properly prepared blood plasma) and gently, using anesthetics and various healing techniques, extract your brain from your skull along with the few bodily widgets that also directly affect your thinking (a few glands here and there, primarily), you'd still be intelligent. You'd probably be pretty unhappy, and perhaps you'd go insane sooner rather than later, but insanity is still a consequence of intelligence: it takes a lot of thinking power to support an illusory image of the world when your previous experience has taught you differently, and even more to believe and act within the bounds of that illusion.

Furthermore, if I could surgically remove a good bit of that brain (the parts governing your breathing, your heartbeat, your sense of balance, pain... quite a list, actually), you would still be able to demonstrate that you are intelligent. Numerous case studies of specific brain injuries with exactly those consequences bear this assertion out. In another (horrible) example, when considering proactive surgical procedures from frontal lobotomies to brain surgeries that remove significant chunks of the brain (for example, tumor removal), it is almost trivial to demonstrate that the entirety of the brain isn't required for intelligence to remain a product of the (remaining) structure.

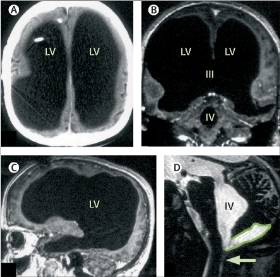

One of my favorite (also horrible) examples is this poor French fellow who was born with a problem of excess fluid in his skull. They inserted a tap, he grew up essentially normally, and the tap was removed at age 14 for whatever reason. But when, as an adult many years later, his head was scanned, it was found that the fluid problem had returned, and that his brain was occupying a very small fraction of the space inside his skull. Of the four images shown above, the left side is him, and the right side is a normal brain.

Was this fellow still intelligent? Yes, absolutely. His IQ tests returned a score of about 75; he had a wife and two children and worked as a civil servant. So this is a solid example showing that the argument that we must have a cell count equivalent to a normal human brain in order for intelligence to exist is also absolutely false. It also brings up some very interesting questions about animal (non-human animals, I mean) intelligence based on brain volume, which I will spare you in this post out of the kindness of my heart.

So clearly, intelligence is strictly an intrinsic, emergent function of just the brain. It is the ability to conceive ideas; to remember states like feelings and concepts; and to recall these, build upon them, or use them to alter the building of something else, that defines actual intelligence. Memory, induction, reduction, deduction, classification... these sorts of things.

Yes, certainly, we're used to intelligence being most obvious when presented to us in classic human form. But the fact is, this is correlation, not causation. The above explanations demonstrate why.

Now, I'm not disputing that we got our intelligence in the course of evolving bodies and senses and so forth; but that process has been and gone, and now we have our intelligence, and experience with the disabled unquestionably shows that even if evolution required bodies for its development process, the actual result does not.

Submersing yourself in an isolation tank and losing all sense of input and output doesn't instantly make you unintelligent, because those things are irrelevant to the mechanism from which intelligence manifests itself. They're just input channels that intelligence can usefully process, which is in no way the same as intelligence itself. Left to your own devices in such a tank, you will process information you already have, or perhaps create new information and process it. So again, it is very clear that intelligence is a product of the brain and the brain alone.

I think I've solidly established that the problem of AI is not related to bodies in any way, shape or form. So what is the problem of AI, then? Well, it is both considerably simpler than that, and much, much more difficult.

For intelligence to be recognized by us, it will need input to think about, and output to communicate what it has thought so we may be aware that it is thinking (and likely so that it is aware that we are there and we are so aware, thus creating an interaction, hopefully a useful one.)

This is trivial. Feed it text, and take text output. You now have something infinitely more capable than a blind, deaf, dumb, spinally injured human being — something with broadly capable input and output communications methods. Perhaps equivalent to a chat window open to someone isolated in the arctic, or in space. There's no serious limit to what you can communicate using this method. Some things are tougher than others, and individual abilities vary, but even the least articulate among us — whose presence is painfully obvious on Internet chat boards, I might add — are generally acknowledged to still be intelligent in every sense of the word.

Even if there's something you find you can't describe in such a channel, or that cannot be described to you by the entity on the other end, this does not in itself indicate that intelligence is not present.

So one perfectly acceptable version of the AI task is: create a mind with (at least) those input and output methods. And there is the problem of AI, isolated properly, with the incorrect "needs a body" argument, and all the issues of body and bodily senses, fully disposed of.

Solve the actual AI problem, and be a hero. And there is every indication it will be solved.

I say that because there is nothing known about the human brain that even hints at capabilities that cannot be performed by digital systems, or that hints at components that work in ways not completely known to us via our current understanding of physics.

Speed isn't even really an honest issue. If I give you an answer, intelligently derived, in a minute, or the same answer a day later, or the same answer a century later, that answer is no less intelligent in any of the three cases; it just becomes more of a communications and social problem as more time passes.

Hence it is obvious that once a set of working algorithms is discovered, we can turn around and trivially determine the minimum acceptable hardware set for them, which I suspect is already sitting on some people's desks, or could be.

As I write this in October of 2010, a terabyte of slow storage is about $60, and a GB of 1 GHz RAM is about $40 (both are US dollar prices). A modern CPU can easily address 4 GB with a 32-bit address bus, and about 16 billion gigabytes with a 64-bit bus, which is what my own current desktop, a Mac Pro, uses. In a 64-bit environment, eight bytes suffice to create a link to any kind of data anywhere in the CPU's RAM address space, and data structures can be anything at all, with as many links in, out or both as needed. Text is trivially stored as one character per byte, and can be losslessly compressed to less than that if the compute time consumed in such a compression is acceptable.
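For anyone who wants to check that arithmetic, here is a minimal sketch in Python using only the standard `struct` and `zlib` modules. The function names and the sample text are made up for illustration, and it counts a gigabyte as 2^30 bytes, so the 64-bit figure comes out as 2^34 gigabytes, which is the "16 billion" above if you also count a billion as 2^30.

```python
import struct
import zlib

GIB = 2**30  # bytes in one (binary) gigabyte; an assumption about units


def addressable_gib(address_bits: int) -> int:
    """Gigabytes reachable with a flat byte address of the given width."""
    return 2**address_bits // GIB


def pack_link(address: int) -> bytes:
    """Encode a 64-bit address as a fixed-size, little-endian link."""
    return struct.pack("<Q", address)


def compression_ratio(text: str) -> float:
    """Compressed size divided by raw size, at one byte per character."""
    raw = text.encode("ascii")
    packed = zlib.compress(raw, 9)
    # Lossless: decompressing gives back exactly the original bytes.
    assert zlib.decompress(packed) == raw
    return len(packed) / len(raw)


print(addressable_gib(32))        # 4
print(addressable_gib(64))        # 17179869184, i.e. 2**34
print(len(pack_link(2**64 - 1)))  # 8 bytes, even for the highest address

# Hypothetical, deliberately repetitive sample text.
sample = "the quick brown fox jumps over the lazy dog. " * 200
print(compression_ratio(sample) < 1.0)  # True
```

Real text will compress less dramatically than a repeated sentence, and how much you gain depends on the compressor and the material; the point is only that one byte per character is a ceiling, not a floor.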

With a little hackery, requiring technical skills at about the level of any reasonable EE graduate, making all that RAM and pretty much any amount of slow storage you can imagine available to a CPU is just a matter of money.

The fact that we haven't solved the AI problem yet is, I think, more of a reflection that we don't understand the problem than it is that the problem itself cannot be solved. Consider the two academics I quoted at the start of this post; it astonishes me that they have failed to work out the flaws in their argument, and yet, there they are, proceeding from an entirely false premise and therefore making the task even more complex than it already is. Perhaps they will come across my post here. I hope so.

> I agree, they do not. But you assume they have acquired intelligence before becoming deaf, blind, etc.

I posit that human intelligence is a capability of the brain, not the senses, so that yes, intelligence can certainly be present if, for instance, eyes or eardrums are not.

> It’s well known that a child who is born deaf/blind is hugely disadvantaged developmentally, compared with a child who, through illness, becomes deaf/blind.

Certainly. However, you are conflating a reduced ability to progress with a lack of intelligence. What is happening here is that the learning is done with fewer tools, and via more rarely traveled paths, so that of course the learning is different in character and degree; but this does not, in any way, imply that the individual is not intelligent. It simply demonstrates that learning through different channels results in (no surprise) different learning, and that this in turn isn't necessarily collinear with the learning of those around them. On the other side of this is the certain observation that a blind person uses their sense of sound in a far more sophisticated manner than a "normal" person does. Is this because they are less intelligent? No, it's because they have put their intelligence to work harder in one area than we usually are forced to. Intelligence is native; what you do with it is guided by the senses you have. If they are wide open to the usual range of human experiences, you will learn similarly to other humans. If not, you won't, but that doesn't mean you're unintelligent. A computer would, if designed at all sensibly, have wide open channels (text, sound, vision), and so if indeed it is intelligent, it would be able to develop.

> The fallacy of your argument is this: you have not taken account of the critical role of development in human intelligence.

No, I have fallen prey to no such fallacy. I assert that intelligence is there to start with (assuming no actual brain injury is also present), and that development is guided by the sensorium, while performed by intelligence. Change the sensorium, and you will certainly change the development. You can cripple it, even stop it. But you still will not have eliminated intelligence. Inside the sense-free individual, the mind still operates. What it is operating upon is the question. But the assertion that an AI, lacking the human sensorium, cannot be intelligent — that's indefensible. It will have "a" sensorium, one that has many things in common with ours. Would that make it different? Sure. But it wouldn't make it unintelligent.

> There have been some pretty horrible animal experiments that establish that sensory deprivation from birth stunts development...

You are conflating development (the path the intelligence follows in gathering information and the tools it uses to do so) with the intelligence itself. These are entirely distinct things. A congenitally blind person, never sighted, is neither inhuman nor unintelligent. They are just different (and not so much that you wouldn't marry one). Likewise the congenitally deaf. Your argument is wrong right from the outset, because you assume that such facilities control intelligence; they don't. They can guide its development, but, if not present, other inputs guide intelligence, often to differing degrees as compared to a sighted or non-deaf person. An AI would have other inputs, and so it would be different, but it would still have guides and so its intelligence would still develop.

> Perhaps you are thinking that we can sidestep the whole tedious process of development in AI? If only we can design our fully fledged and capable AI so that as soon as we throw its on-switch it is conscious and thinking...? Yes, it's a tantalising thought, but all of the evidence suggests that artificial intelligence (just like natural intelligence) also needs to be embodied and, in all likelihood, will need to go through a developmental process too.

No. I'm thinking that once an AI has developed (past tense) to a degree that we, and perhaps it, are satisfied with, then we can copy it wholesale. That's just a technical issue, and is perfectly reasonable. I agree, development is required. But that won't be true for each individual. So it isn't about designing development in; it's about developing an agreeable result, and then copying that result.

> First, only an embodied intelligent agent is fully validated as one that can deal with the real world.

That is completely insupportable, unless by "embodied" we accept any remote manipulation, and in that case, an AI would be more embodied than we are. A wider, more powerful sensorium, faster reflexes, better memory... this is, at best, an argument for AI.

> Second, only through a physical grounding can any internal symbolic or other system find a place to bottom out, and give ‘meaning’ to the processing going on within the system.

This says nothing about whether an AI could develop such a grounding, and as such is irrelevant.

> Brooks goes on to ask the question “can there be disembodied mind?” He concludes that there cannot.

He is wrong. 🙂

> without an ongoing participation and perception of the world there is no meaning for an agent

Even if true, which is still highly debatable... do you suddenly have "no meaning" when in a sensory deprivation tank? No, of course not. So the above quoted assertion is probably only philosophical pin-dancing. Despite that, the above doesn't apply to an AI at all, which would be fully capable of participating in, and experiencing perception of, the world.

> A free-running disembodied AI system will forever regress as it tries to reason about knowledge. Like a child, perhaps, who just answers every reply to a question with another question “why?”.

This is not subject matter I have addressed. I cannot imagine why anyone would try to create such a thing. What would be the point? It only seems intentionally cruel.

So, as I have maintained all along — it has not been demonstrated in any way that we will need a human body or form to create an AI.

Again, thanks for writing. I urge you to consider the flaws in your position I've illuminated here. Intelligence is not perception. Development is not intelligence. Perception is not development. Intelligence is the ability to reason; perception is the ability to bring information to the intelligence; development is change of intelligence through any mechanism, including acquisition of information and induction. None of this speaks to any need for human bodily form or the specific human sensorium.


Since you've quoted me I feel I must respond:

With the greatest respect, I think the arguments you have set out here are wrong. Let me try and explain why.

Firstly you say:

I agree, they do not. But you assume they have acquired intelligence before becoming deaf, blind, etc. It's well known that a child who is born deaf/blind is hugely disadvantaged developmentally, compared with a child who, through illness, becomes deaf/blind.

You go on to say

and then

The fallacy of your argument is this: you have not taken account of the critical role of development in human intelligence. We do not emerge from our mothers' wombs fully cognitively mature, or even fully conscious - in that our sense of self is something that doesn't emerge until we are several months old, then language starts to appear after 15 months or so.

That developmental process needs a body and its sensorium. One of the first things babies do is 'body babbling', apparently random movements which appear to be part of the process of learning the body's boundaries (me and not me), and how to control it. There have been some pretty horrible animal experiments that establish that sensory deprivation from birth stunts development, and our own human experience is that human infants who experience a rich and stimulating environment do very much better than those who suffer impoverished environments in early months and years.

You go on to say

For the reasons I've given I do not think you have established this at all, at least not for intelligence in general. This is, however, the first mention of AI in your essay. Perhaps you are thinking that we can sidestep the whole tedious process of development in AI? If only we can design our fully fledged and capable AI so that as soon as we throw its on-switch it is conscious and thinking...? Yes, it's a tantalizing thought, but all of the evidence suggests that artificial intelligence (just like natural intelligence) also needs to be embodied and, in all likelihood, will need to go through a developmental process too.

For a very clear (and highly readable) paper that discusses embodiment in some depth read Rodney Brooks' 1991 paper "Intelligence without reason", which you can find in his excellent book "Cambrian Intelligence: the early history of the new AI". I will quote Brooks (because he says it better than I can):

Brooks goes on to ask the question "can there be disembodied mind?"

He concludes that there cannot. He says

and that the key idea from embodiment is

I think Brooks (and others) are right. A free-running disembodied AI system will forever regress as it tries to reason about knowledge. Like a child, perhaps, who just answers every reply to a question with another question "why?".

To comment on your final paragraph: although general purpose (or wide) AI remains a very hard problem, I think that, after a number of false starts and unfulfilled promises, we are beginning to understand it well enough to construct a plausible methodology. It's a very interesting new field called epigenetic robotics, which encompasses both embodiment and development.

Best wishes

Alan Winfield